Beyond the Monolithic Prompt: Why We Built Agent Flows for Voice AI

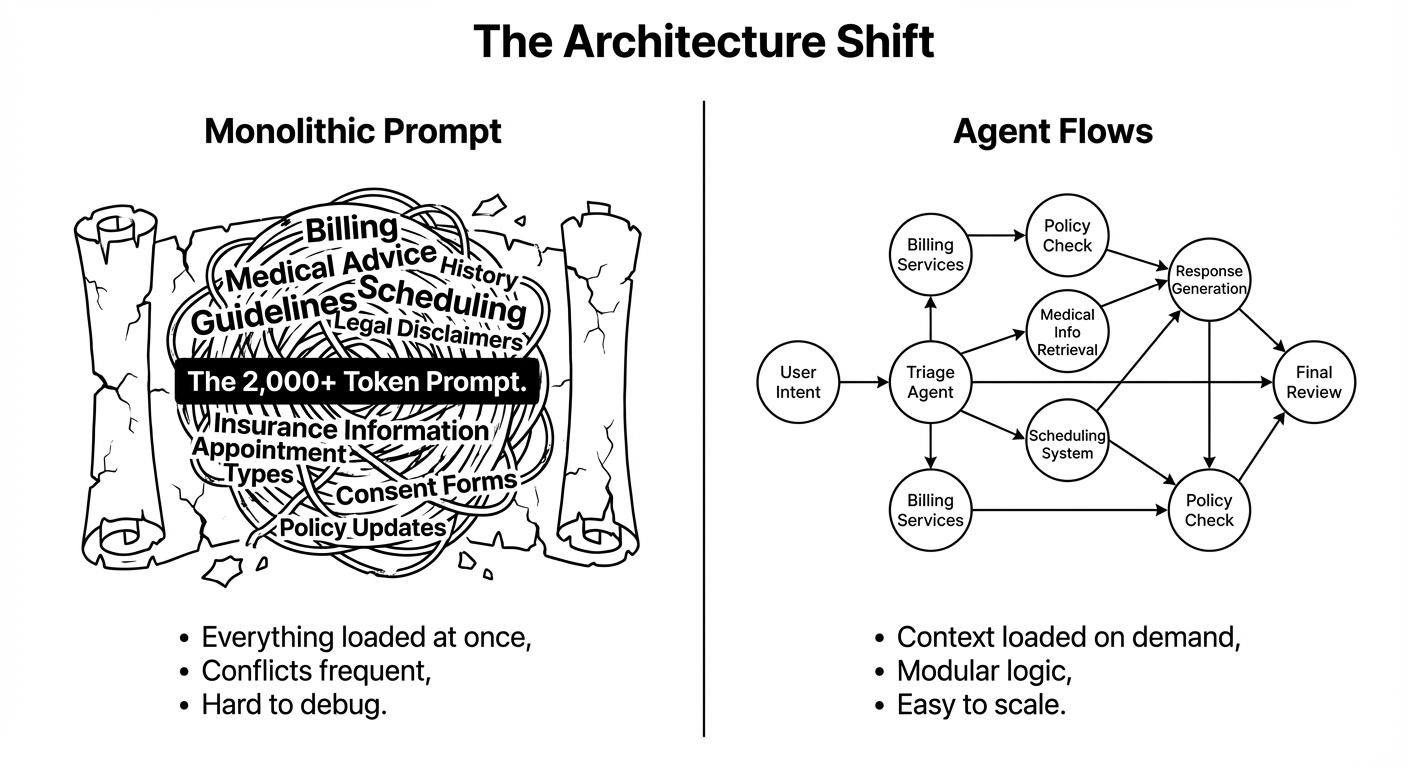

Traditional voice AI agents rely on massive 2,000+ token prompts that create latency, context degradation, and debugging nightmares. Agent Flows restructure conversations as directed graphs; in one of our healthcare deployments, that cut per-turn tokens by 70% and latency by roughly 50%.

For the past 6 months, building production-ready voice AI meant writing unmaintainable 2,000+ token prompts. Agent Flows change that fundamentally.

At Osvi AI, we've built hundreds of voice agents for global businesses - from healthcare clinics managing millions of USD in annual revenue to trading platforms scaling their support operations. Every single one started the same way: with a massive prompt that tried to be everything to everyone.

We're done with that approach.

The Problem with Monolithic Prompts

Let's say you're building a front desk agent for a clinic. Your prompt needs to handle:

- Appointment scheduling for 12 different doctors

- Insurance/TPA verification processes

- Billing and payment collection

- Pharmacy prescription orders

- Post-discharge follow-ups

- General inquiries about clinic timings and address

The traditional approach? Pack all of this into one giant prompt and pass it to the LLM on every turn of the conversation.

Here's what actually happens:

1. Token Overhead Kills Latency

Every time the agent needs to respond - literally every turn in the conversation - the entire prompt gets processed again along with the growing chat history.

When a patient calls asking, "Which doctors are available tomorrow?", why are we passing all the billing-handling tokens to the LLM? We're not just wasting tokens; we're adding 200-400ms of latency because the model has to process irrelevant context.

In voice AI, latency isn't just a metric - it's the difference between "this feels like talking to a person" and "this feels like talking to a machine."

2. Context Degradation at Scale

As conversations extend past 8-10 minutes, agent performance degrades dramatically.

We've observed that when context window usage crosses 70-80%, agents start hallucinating. They forget what was discussed earlier in the call. A patient who mentioned their preferred doctor 5 minutes ago suddenly needs to repeat themselves.

Why? Because the LLM is trying to process that massive prompt + the growing conversation history, and the older context gets progressively deprioritized. The bigger your prompt, the faster you hit this degradation point.
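The link between prompt size and the degradation point can be made concrete with a back-of-the-envelope model. The sketch below assumes a hypothetical 8,000-token context window, about 120 tokens of new history per turn, a 350-token scoped prompt for comparison, and a 75% threshold (the midpoint of the range above). None of these are measurements, and real history growth is not perfectly linear.

```python
def turns_until_threshold(prompt_tokens, tokens_per_turn, context_window, threshold=0.75):
    """Return the first turn at which prompt + history exceeds threshold * window.

    Assumes history grows by a fixed tokens_per_turn, which is a simplification.
    """
    budget = threshold * context_window
    if prompt_tokens > budget:
        return 0
    # Smallest n such that prompt_tokens + n * tokens_per_turn > budget.
    return int((budget - prompt_tokens) // tokens_per_turn) + 1


# Hypothetical figures, chosen only to illustrate the trend.
CONTEXT_WINDOW = 8_000
TOKENS_PER_TURN = 120

monolith_turns = turns_until_threshold(2_000, TOKENS_PER_TURN, CONTEXT_WINDOW)
scoped_turns = turns_until_threshold(350, TOKENS_PER_TURN, CONTEXT_WINDOW)

print(monolith_turns)  # 34
print(scoped_turns)    # 48
```

Under these assumptions the smaller prompt buys about 14 extra turns before the threshold is crossed, which is the effect described above.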

3. Impossible to Debug and Iterate

When something goes wrong with a monolithic prompt, good luck figuring out which section caused the issue. Was it the personality guidelines conflicting with the edge case handling? Did the billing instructions somehow interfere with appointment scheduling?

You end up playing prompt whack-a-mole: fix one thing, break another. A change meant to improve TPA verification accidentally makes the agent too formal during general inquiries.

How Agent Flows Work

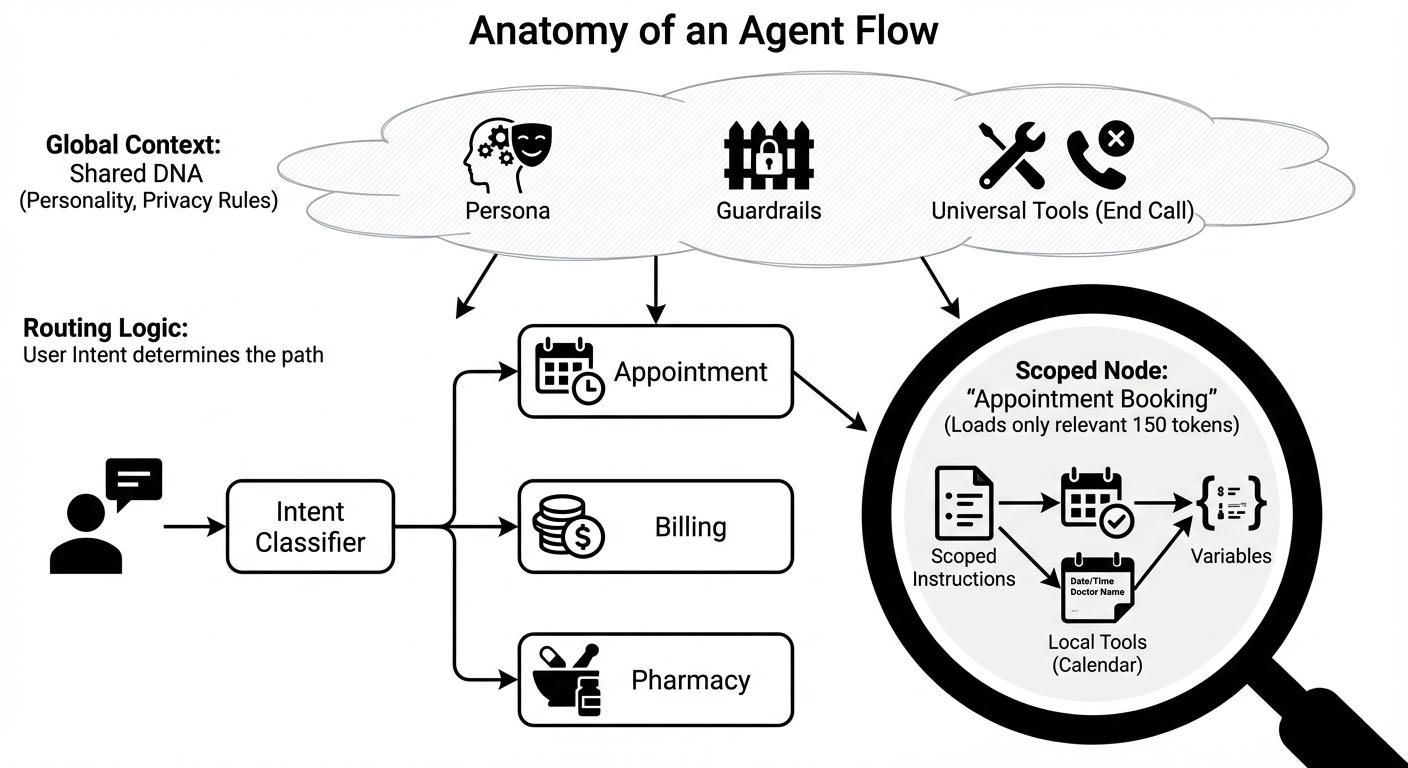

Agent Flows restructure the entire conversation as a directed graph of nodes and edges.

Global Layer: What Every Node Shares

You define global properties that apply throughout:

- Agent Objective: "You are a professional front desk agent for Dr. Sharma's Clinic"

- Personality Traits: Warm, patient, uses respectful language

- Guardrails: No medical advice, data privacy rules, always answer from knowledge base

- Universal Tools: end_call(), transfer_to_human(), send_voicemail()

This global context is lean: roughly 150-400 tokens instead of 2,000, depending on your use case.
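One way to picture the global layer is as a small immutable record that gets rendered into text on every turn. This is a minimal sketch, not Osvi AI's actual schema: the class name `GlobalContext`, its field names, and the `render` format are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GlobalContext:
    """Properties shared by every node (field names are illustrative)."""
    objective: str
    personality: Tuple[str, ...]
    guardrails: Tuple[str, ...]
    universal_tools: Tuple[str, ...]

    def render(self) -> str:
        """Flatten the shared properties into the text sent on every turn."""
        return "\n".join([
            self.objective,
            "Personality: " + ", ".join(self.personality),
            "Guardrails: " + "; ".join(self.guardrails),
            "Tools: " + ", ".join(self.universal_tools),
        ])


clinic_globals = GlobalContext(
    objective="You are a professional front desk agent for Dr. Sharma's Clinic",
    personality=("warm", "patient", "uses respectful language"),
    guardrails=("no medical advice", "follow data privacy rules",
                "always answer from the knowledge base"),
    universal_tools=("end_call", "transfer_to_human", "send_voicemail"),
)

print(clinic_globals.render())
```

Freezing the record reflects the idea that nodes read the global layer but never change it mid-call.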

Nodes: Scoped Intelligence

Each node represents a specific phase of the conversation with:

- Scoped Instructions: Only what's needed for this specific task

- Node-Specific Tools: Only the functions relevant to this step

- Variables: Data that flows to subsequent nodes

Example node for appointment booking:

Instructions: "Help the patient schedule an appointment. Ask for preferred date, doctor, and reason for visit. Check doctor availability using the calendar tool."

Tools: check_doctor_availability(), book_appointment(), get_patient_history()

Output Variables: appointment_date, doctor_name, visit_reason

That's maybe 100-150 tokens, versus the 2,000-token monolith.
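The node above could be represented roughly as follows. This is a sketch under assumptions: the `Node` class, `build_turn_prompt`, and the `collect_payment` tool on the billing node are hypothetical names, not part of any documented API. The point is that the prompt for a turn is assembled from the global context plus the active node only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    """One phase of the conversation (structure is illustrative)."""
    name: str
    instructions: str
    tools: List[str]
    output_variables: List[str]


def build_turn_prompt(global_context: str, node: Node) -> str:
    """Combine the shared context with only the active node's scope."""
    return "\n".join([
        global_context,
        f"Current task ({node.name}): {node.instructions}",
        "Available tools: " + ", ".join(node.tools),
        "Collect: " + ", ".join(node.output_variables),
    ])


booking = Node(
    name="appointment_booking",
    instructions=("Help the patient schedule an appointment. Ask for preferred "
                  "date, doctor, and reason for visit."),
    tools=["check_doctor_availability", "book_appointment", "get_patient_history"],
    output_variables=["appointment_date", "doctor_name", "visit_reason"],
)

# Hypothetical second node; its instructions never reach the booking prompt.
billing = Node(
    name="billing",
    instructions="Help the patient pay an outstanding bill.",
    tools=["collect_payment"],
    output_variables=["payment_amount"],
)

prompt = build_turn_prompt(
    "You are a professional front desk agent for Dr. Sharma's Clinic", booking
)
print(prompt)
```

While the booking node is active, nothing about billing is sent to the model.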

Edges: Intelligent Routing

Edges define how the conversation flows between nodes based on:

- User Intent: "I want to pay my bill" → Routes to Billing Node

- Conditional Logic: If payment_amount > $10,000 → Route to manager approval

- Context State: If appointment_booked == true → Route to confirmation

The agent only loads the context it needs, when it needs it.
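The three kinds of edge can all be modeled as predicates over the conversation state. The sketch below is illustrative only: the `Edge` class, the node names, and the assumption that an upstream classifier has already written `intent` into the state are not taken from the product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Edge:
    """A transition that fires when its condition holds for the current state."""
    source: str
    target: str
    condition: Callable[[Dict], bool]


def next_node(current: str, state: Dict, edges: List[Edge]) -> Optional[str]:
    """Return the target of the first matching edge, or None to stay put."""
    for edge in edges:
        if edge.source == current and edge.condition(state):
            return edge.target
    return None


EDGES = [
    # User intent (assumed to be classified upstream and stored in state).
    Edge("intent_classification", "billing",
         lambda s: s.get("intent") == "pay_bill"),
    # Conditional logic on a collected variable.
    Edge("billing", "manager_approval",
         lambda s: s.get("payment_amount", 0) > 10_000),
    # Context state set by an earlier tool call.
    Edge("appointment_booking", "confirmation",
         lambda s: s.get("appointment_booked") is True),
]

print(next_node("billing", {"payment_amount": 12_000}, EDGES))  # manager_approval
```

Because edges are checked in order, more specific conditions should be listed before general ones in this design.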

The Technical Advantages

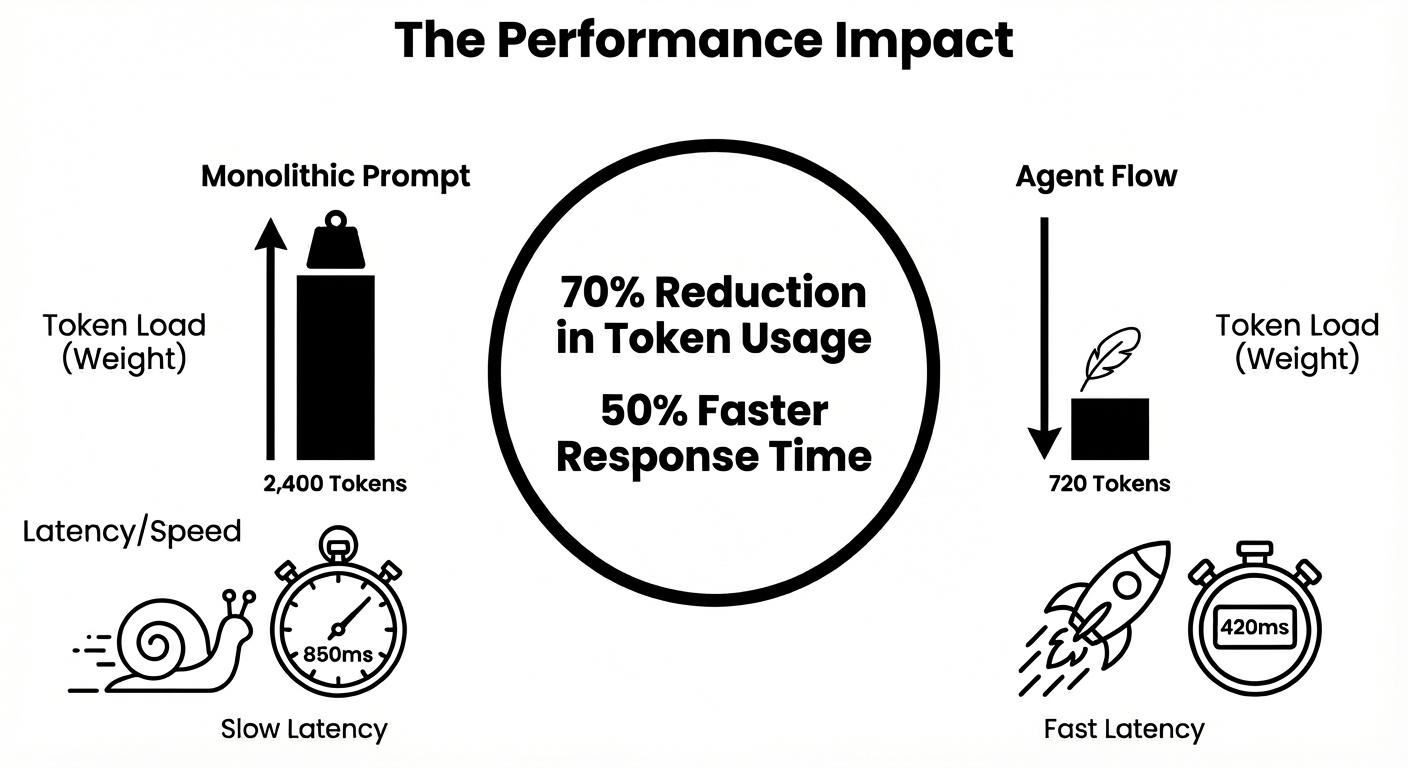

Dramatic Token Reduction

Instead of processing a 2,000-token prompt on every turn, you're processing:

- 200-400 tokens (global context)

- 150 tokens (current node instructions)

- Growing chat history (unavoidable)

Real example from our healthcare client:

- Before Agent Flows: Average 2,400 tokens per turn → 850ms latency

- After Agent Flows: Average 720 tokens per turn → 420ms latency

That's a 70% reduction in tokens and a roughly 50% reduction in latency. Users feel the difference.
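The percentages follow directly from the before and after figures quoted for the healthcare client. A quick check, using only those four numbers:

```python
def percent_reduction(before: float, after: float) -> float:
    """Percentage by which `after` is smaller than `before`."""
    return (before - after) / before * 100


token_cut = percent_reduction(2400, 720)    # tokens per turn
latency_cut = percent_reduction(850, 420)   # milliseconds

print(f"tokens: {token_cut:.1f}%")    # tokens: 70.0%
print(f"latency: {latency_cut:.1f}%")  # latency: 50.6%
```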

Context Retention Through Variables

Instead of hoping the LLM remembers what was discussed 10 minutes ago, you explicitly pass critical data through variables:

Node 1 (Appointment Booking):

Collects: patient_name, doctor_preference, appointment_date

Node 2 (Insurance Verification):

Receives: patient_name, appointment_date

Uses: "I see you have an appointment with Dr. Sharma on [appointment_date]"

The context degradation problem? Largely solved. You're not relying on the LLM's context window - you're programming explicit state management.
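Explicit state management here can be as simple as a dictionary that outlives any single node, with each node pulling only the variables it declares. This is a minimal sketch: the function name `scoped_variables` and the sample values are hypothetical.

```python
from typing import Dict, List


def scoped_variables(flow_state: Dict[str, str], receives: List[str]) -> Dict[str, str]:
    """Return only the variables a node declares, failing fast if any is missing."""
    missing = [name for name in receives if name not in flow_state]
    if missing:
        raise KeyError(f"missing variables: {missing}")
    return {name: flow_state[name] for name in receives}


# Node 1 (appointment booking) writes what it collected. Values are made up.
flow_state: Dict[str, str] = {}
flow_state.update(
    patient_name="Asha",
    doctor_preference="Dr. Sharma",
    appointment_date="14 March",
)

# Node 2 (insurance verification) receives only what it declared.
insurance_inputs = scoped_variables(flow_state, ["patient_name", "appointment_date"])
opening_line = (
    "I see you have an appointment on {appointment_date}, {patient_name}."
    .format(**insurance_inputs)
)
print(opening_line)  # I see you have an appointment on 14 March, Asha.
```

Raising on a missing variable surfaces a broken flow at the handoff instead of letting the model improvise a value.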

Controlled Conversation Flow

In a single-prompt agent, the LLM decides the entire conversation flow through probabilistic generation. Sometimes it works beautifully. Sometimes it goes off the rails in ways you can't predict.

With Agent Flows, you're designing the conversation architecture:

- Greeting → Intent Classification → Route to appropriate node

- Complete task → Offer related service or end call

- Error state → Escalate to human transfer

You maintain the flexibility of conversational AI while adding the reliability of deterministic routing.
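The architecture in the list above amounts to a small state machine. The sketch below walks one call through it. The node names, the event labels, and the choice to send any unrecognized event to a human are assumptions made for illustration, not a description of the shipped router.

```python
from typing import Dict, List, Tuple

# (current node, event) -> next node. Labels are illustrative.
TRANSITIONS: Dict[Tuple[str, str], str] = {
    ("greeting", "done"): "intent_classification",
    ("intent_classification", "book_appointment"): "appointment_booking",
    ("intent_classification", "pay_bill"): "billing",
    ("appointment_booking", "done"): "offer_related_service",
    ("billing", "done"): "offer_related_service",
    ("offer_related_service", "declined"): "end_call",
}
TERMINAL = {"end_call", "transfer_to_human"}


def advance(current: str, event: str) -> str:
    """Follow a defined edge; errors and unknown events escalate to a human."""
    if event == "error":
        return "transfer_to_human"
    return TRANSITIONS.get((current, event), "transfer_to_human")


def walk(events: List[str], start: str = "greeting") -> List[str]:
    """Return the sequence of nodes visited for a list of events."""
    path = [start]
    for event in events:
        path.append(advance(path[-1], event))
        if path[-1] in TERMINAL:
            break
    return path


print(walk(["done", "book_appointment", "done", "declined"]))
# ['greeting', 'intent_classification', 'appointment_booking',
#  'offer_related_service', 'end_call']
```

The model still decides what to say inside each node; only the transitions between nodes are fixed.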

Script Adherence Without Rigidity

Traditional IVR systems are rigidly scripted but feel robotic. Pure LLM agents are natural but unpredictable.

Agent Flows give you the best of both worlds:

Within each node, the agent uses natural language to accomplish the task. It can handle variations in how users express intent, clarify ambiguities, and have natural back-and-forth.

Between nodes, the conversation follows explicit guardrails. The agent can't randomly jump from appointment booking to discussing billing without going through the proper routing logic.

Result: Conversations that feel natural but stay on track.

Who This Is For

Non-Technical Teams: You don't need to be a prompt engineering expert. Design the conversation flow visually, define what each step should accomplish, and let the system handle the complexity.

Developers Maintaining Complex Agents: Instead of a 3,000-token prompt with 47 nested conditional statements, you have a clear graph structure that's maintainable and debuggable.

Businesses Scaling Voice AI: When you need to add a new service or modify how something works, you update specific nodes instead of re-engineering the entire prompt.

Looking Ahead: Multi-Agent Orchestration

Agent Flows are the foundation for something even more powerful: multi-agent systems.

Think about calling your bank's helpline. You dial a general number, explain your issue, and get transferred to the credit card department, investment team, or loan specialist.

That's not one agent trying to do everything - it's specialized agents handling their domain expertise.

We're building toward a future where each node can be its own specialized agent:

- Intake Agent: Understands your query, gathers context

- Billing Agent: Expert in payment processing and financial queries

- Support Agent: Handles technical troubleshooting

- Escalation Agent: Manages complex cases requiring human intervention

Each agent maintains its own knowledge base, tools, and optimization. The system orchestrates handoffs with full context transfer.
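A handoff with full context transfer might look something like the sketch below. Since this describes a direction and not a shipped feature, everything here is hypothetical: the agent functions, the `AGENTS` registry, the context keys, and the fallback to an escalation agent.

```python
from typing import Callable, Dict

Context = Dict[str, str]


def billing_agent(context: Context) -> str:
    return f"Billing agent: reviewing '{context['issue']}' for {context['caller_name']}."


def support_agent(context: Context) -> str:
    return f"Support agent: troubleshooting '{context['issue']}' for {context['caller_name']}."


def escalation_agent(context: Context) -> str:
    return f"Escalation agent: routing {context['caller_name']} to a human."


# Hypothetical registry of specialized agents, keyed by domain.
AGENTS: Dict[str, Callable[[Context], str]] = {
    "billing": billing_agent,
    "support": support_agent,
    "escalation": escalation_agent,
}


def hand_off(target: str, context: Context) -> str:
    """Dispatch to the target agent with everything gathered so far."""
    agent = AGENTS.get(target, escalation_agent)
    # Pass a copy so a specialist cannot mutate the intake record.
    return agent(dict(context))


# Context an intake agent might have gathered (sample values).
intake_context = {"caller_name": "Asha", "issue": "duplicate charge"}
reply = hand_off("billing", intake_context)
print(reply)  # Billing agent: reviewing 'duplicate charge' for Asha.
```

The caller never repeats themselves because the specialist starts from the intake agent's context, not from an empty conversation.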

This is how we'll handle the truly complex use cases - not by making one agent do everything, but by letting specialized agents collaborate.

Why This Matters for Businesses

For businesses deploying voice AI, Agent Flows solve critical challenges:

Multilingual Complexity: Different nodes can have different language preferences. Your greeting might be in Hinglish, while billing can be more formal Hindi or English based on user preference.

Compliance Requirements: Industry-specific compliance rules can be enforced at the flow level, not buried in prompt clauses.

Scalability with Control: As your business grows and use cases expand, you add nodes rather than bloating a single prompt.

At Osvi AI, we built this because we needed it. Our clients were hitting the walls of monolithic prompts, and we saw the future: modular, maintainable, high-performance voice AI that scales with business complexity.

Agent Flows aren't just a feature - they're how voice AI should work.